Introduction:

In the previous articles (e.g., Smart OCR), we discussed how a developer can integrate an AI service into an application so that it performs smart tasks such as image recognition and voice recognition. We also discussed the benefits of using AI services, such as a lower TCO (total cost of ownership) and easier updates. Kindly note that I use "AI services" and "cognitive services" interchangeably in this article, so don't get confused :D.

However, we must admit that the AI services approach comes with some challenges:

Connectivity: Communicating back and forth with the cloud consumes bandwidth and requires a reasonably fast connection. This can be a problem on low-bandwidth networks and for devices with slow connectivity (think of a mobile phone on a slow 2G/3G connection using your service!).

Compliance: Some organizations have strict policies on data leaving their boundaries. This is particularly relevant in the military, security, health, and banking sectors. Moreover, such requirements can come from national or regional laws such as the GDPR in Europe.

Response time: Intuitively, sending data to the cloud and back takes time; therefore, AI services can be problematic for time-critical applications.

To address these challenges, Microsoft has released some of its AI services as Docker containers that you can run close to your applications, performing AI operations on-premises. However, before discussing that in depth, let's take a small detour and talk briefly about Docker containers.

Docker Containers:

Docker makes it easy for developers to create, package, and deploy applications using containers. It can quickly spin up multiple container instances from an image on a single Docker host, as illustrated below. A detailed explanation of Docker is beyond the scope of this blog post; kindly refer to the Docker documentation for more information.

Cognitive Services Docker Images:

To address the cloud-related challenges discussed previously, Microsoft has released a few of its cognitive services (more are coming) as Docker containers. To the best of my knowledge, Microsoft is the only AIaaS vendor that provides Docker containers for its cognitive services. At the time of writing, containers are available for the Computer Vision, LUIS, Face, and Text Analytics services. Please make sure that you have all the prerequisites before proceeding with the tutorial.

In this tutorial, I will use Fiddler to compare the performance of the AI service in the cloud and as a Docker container, so that you have an objective comparison. If you are not familiar with Fiddler, refer to its documentation. Fiddler is not required to follow this tutorial, but it is a valuable HTTP traffic inspection tool that I highly recommend playing with.

The tutorial applies sentiment analysis to the following excerpt from the poem "Solitude" by Ella Wheeler Wilcox.

| Laugh, and the world laughs with you; Weep, and you weep alone; For the sad old earth must borrow its mirth, But has trouble enough of its own. Sing, and the hills will answer; Sigh, it is lost on the air; The echoes bound to a joyful sound, But shrink from voicing care. |
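For reference, the sentiment endpoint expects each text wrapped in a "documents" array, with an "id" per document that is echoed back in the response. The sketch below builds that body in Python. It assumes the Text Analytics v2 request shape, and build_sentiment_payload and POEM are hypothetical names used only for illustration.

```python
import json

# The excerpt above as a single document (hypothetical constant for this example).
POEM = (
    "Laugh, and the world laughs with you; Weep, and you weep alone; "
    "For the sad old earth must borrow its mirth, But has trouble enough of its own. "
    "Sing, and the hills will answer; Sigh, it is lost on the air; "
    "The echoes bound to a joyful sound, But shrink from voicing care."
)


def build_sentiment_payload(texts, language="en"):
    """Wrap each text in the `documents` envelope (assumed Text Analytics v2 shape).

    IDs are assigned as "1", "2", ... so results can be matched back to inputs.
    """
    return {
        "documents": [
            {"language": language, "id": str(i), "text": text}
            for i, text in enumerate(texts, start=1)
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_sentiment_payload([POEM]), indent=2))
```

The same body works for both cases in this tutorial; only the destination of the request changes.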

Case 1: The request is sent to the Azure cognitive service in the cloud using the Microsoft Text Analytics test console.

Case 2: The request is sent to the cognitive services Docker container. A detailed explanation of how to start the container is provided below; having Docker properly installed and configured is a prerequisite.

Case 1: AI Service in the Cloud

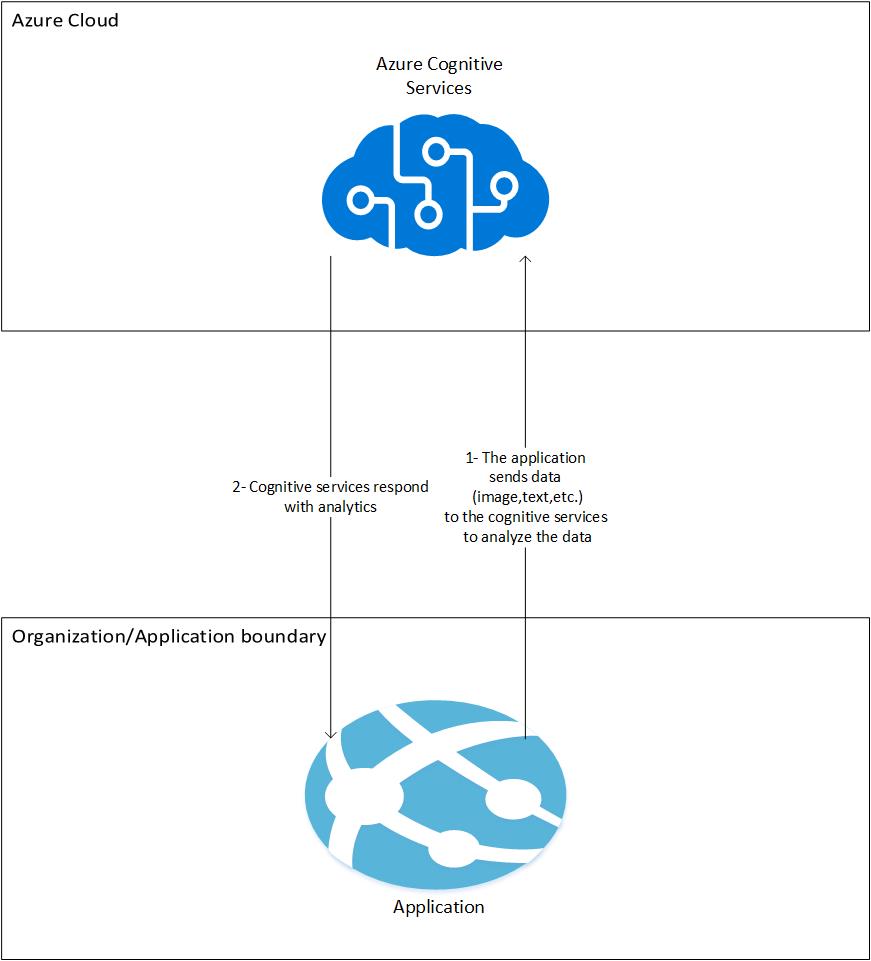

The following picture gives a high-level description of how AI as a Service works when the service is deployed in the Azure cloud:

- The application sends the data to an Azure cognitive service in the cloud (the data leaves the organization's boundary).

- The Azure cognitive service responds with the analysis results.
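In code, the cloud call in Case 1 boils down to an HTTPS POST that carries both the text and the subscription key. Below is a minimal sketch using only the Python standard library. It assumes the Text Analytics v2.0 sentiment path (/text/analytics/v2.0/sentiment) and the Ocp-Apim-Subscription-Key header; build_cloud_request is a hypothetical helper and the endpoint in the usage comment is a placeholder. The function only prepares the request, so nothing is sent until it is passed to urlopen.

```python
import json
import urllib.request

SENTIMENT_PATH = "/text/analytics/v2.0/sentiment"  # assumed API version


def build_cloud_request(endpoint, key, payload):
    """Prepare (but do not send) a sentiment request to the Azure cloud service."""
    return urllib.request.Request(
        endpoint.rstrip("/") + SENTIMENT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The key travels with every request, and so does the text itself.
            "Ocp-Apim-Subscription-Key": key,
        },
        method="POST",
    )


# Usage (requires network access and a real key; endpoint is a placeholder):
# payload = {"documents": [{"language": "en", "id": "1", "text": "Laugh, and the world laughs with you"}]}
# req = build_cloud_request("https://<your-resource-endpoint>", "<your-key>", payload)
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```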

The image below illustrates the process.

Fiddler:

If we intercept the request using Fiddler, we can see that the poetry text is transmitted to the azure.microsoft.com domain. The request took 2.454 seconds.

Case 2: AI Service in a Docker Container

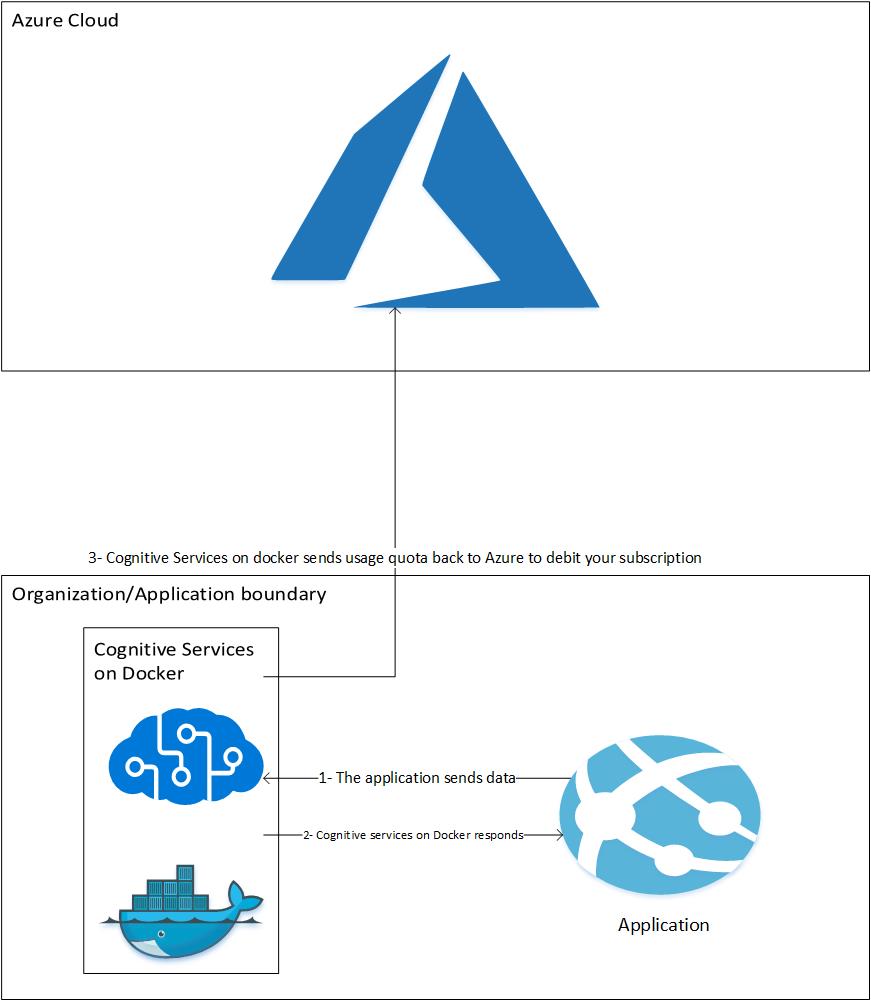

The following picture gives a high-level description of how AI as a Service works when the service is deployed as an Azure cognitive service container:

- The application sends data to the Azure cognitive service container on-premises (confidential data does not leave the organization's boundary).

- The Azure cognitive service container responds to the application with the analysis results.

- The Azure cognitive service container periodically reports billing information back to Azure so that your subscription can be charged.
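From the application's point of view, Case 2 changes only the target URL: the request goes to the local container, and no key is attached because the billing credentials are supplied once, when the container starts. A minimal sketch, assuming the port mapping -p 5000:5000 used in this tutorial, plain HTTP on localhost, and the same v2.0 sentiment path as the cloud service; build_container_request is a hypothetical helper.

```python
import json
import urllib.request

# Assumes the container is reachable on host port 5000 over plain HTTP.
CONTAINER_URL = "http://localhost:5000/text/analytics/v2.0/sentiment"


def build_container_request(payload, url=CONTAINER_URL):
    """Prepare a sentiment request for the on-premises container.

    No subscription key header is set: the container receives its billing
    endpoint and key on the docker run command line instead.
    """
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (requires the container to be running):
# req = build_container_request({"documents": [{"language": "en", "id": "1", "text": "Sing, and the hills will answer"}]})
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(json.load(resp))
```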

First, download the Azure cognitive services sentiment Docker image by running the following command in PowerShell or a command prompt:

```shell
docker pull mcr.microsoft.com/azure-cognitive-services/sentiment
```

Next, we will start up our Docker container. Before doing so, make sure you have an Azure cognitive service resource in your Azure subscription. If you don't have one yet, follow this tutorial from Microsoft.

```shell
docker run --rm -it -p 5000:5000 --memory 4g --cpus 1 mcr.microsoft.com/azure-cognitive-services/sentiment Eula=accept Billing={BILLING_ENDPOINT_URI} ApiKey={BILLING_KEY}
```

Replace {BILLING_ENDPOINT_URI} with your Azure cognitive service endpoint and {BILLING_KEY} with its key; both can be found in the Azure portal. The container needs this information so that it can bill your Azure subscription for its usage. The remaining options map port 5000 on the host to port 5000 in the container, limit the container to 4 GB of memory and one CPU, and remove the container once it stops (--rm).
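If you want to script the container start-up (for example, from a deployment tool), the same command can be assembled as an argument list. A minimal sketch in Python; docker_run_args and start_container are hypothetical helpers, and the endpoint and key are still the values from your Azure portal. The -it flags are deliberately left out, because an interactive terminal is usually not available when Docker is launched from a script.

```python
import subprocess

IMAGE = "mcr.microsoft.com/azure-cognitive-services/sentiment"


def docker_run_args(billing_endpoint, billing_key, host_port=5000, memory="4g", cpus=1):
    """Build the docker run argument list for the sentiment container.

    Mirrors the interactive command, minus -it (no TTY when run from a script).
    """
    return [
        "docker", "run", "--rm",
        "-p", f"{host_port}:5000",  # host port -> container port 5000
        "--memory", memory,
        "--cpus", str(cpus),
        IMAGE,
        "Eula=accept",
        f"Billing={billing_endpoint}",
        f"ApiKey={billing_key}",
    ]


def start_container(billing_endpoint, billing_key):
    """Run the container in the foreground; returns when the container stops."""
    return subprocess.run(docker_run_args(billing_endpoint, billing_key), check=True)
```

Passing a list instead of a single command string avoids shell quoting problems with the key; keep the key itself out of source control, for example by reading it from an environment variable.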

After running the commands, you should see the following output (or similar in PowerShell).

Then navigate to http://localhost:5000 in your browser.

If you can see this page, then CONGRATULATIONS, your cognitive services container is up and running. Click Service API Description to open the Swagger UI; the container exposes its API as a RESTful endpoint described with Swagger.

Now, let's send the sentiment request through Swagger.
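Whether the request is sent from Swagger or from your own application, the response has the same shape as the result shown in this section: a "documents" array with one "score" per input "id", plus an "errors" array. In the v2 API, the score ranges from 0 (negative) to 1 (positive). The sketch below extracts the scores and labels them; sentiment_scores and label are hypothetical helpers, and the 0.5 cut-off is an arbitrary assumption, not something the service defines.

```python
import json


def sentiment_scores(response_text):
    """Map each document id to its sentiment score; fail loudly on service errors."""
    body = json.loads(response_text)
    if body.get("errors"):
        raise ValueError(f"service reported errors: {body['errors']}")
    return {doc["id"]: doc["score"] for doc in body["documents"]}


def label(score, threshold=0.5):
    """Coarse label: scores near 1 are positive, near 0 negative (threshold assumed)."""
    return "positive" if score >= threshold else "negative"


if __name__ == "__main__":
    sample = '{"documents": [{"id": "1", "score": 0.16453871130943298}], "errors": []}'
    for doc_id, score in sentiment_scores(sample).items():
        print(doc_id, round(score, 2), label(score))
```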

We get a result similar to Case 1:

```json
{
  "documents": [
    {
      "id": "1",
      "score": 0.16453871130943298
    }
  ],
  "errors": []
}
```

Fiddler:

When we inspect the traffic with Fiddler, we see that the request was sent to localhost:5000, which means our confidential data does not leave the organization's boundary. Moreover, the response time is 0.046 seconds, more than 50 times faster than in Case 1 (2.454 s vs. 0.046 s, roughly 53x). Impressive, isn't it? :)
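Fiddler's numbers can be cross-checked from code. A single request is a noisy measurement (and the Case 1 figure was taken through the web test console), so the sketch below times several calls and keeps the median. median_latency is a hypothetical helper, and call stands for any zero-argument function that sends one request. The constants simply reproduce the speed-up arithmetic from the two Fiddler timings.

```python
import statistics
import time

# Elapsed times reported by Fiddler in this tutorial (seconds).
CLOUD_SECONDS = 2.454
CONTAINER_SECONDS = 0.046
SPEEDUP = CLOUD_SECONDS / CONTAINER_SECONDS  # about 53x


def median_latency(call, runs=5):
    """Invoke call() `runs` times and return the median wall-clock time in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Usage, given a prepared urllib.request.Request `req` and a running service:
# print(median_latency(lambda: urllib.request.urlopen(req).read()))
```

Running the same function against both the cloud endpoint and the container, from the same machine, gives a fairer comparison than one request each.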

If you keep Fiddler open for a while, you will see periodic requests to management.azure.com; the Azure cognitive services container sends them to report your usage. You can inspect them: no confidential information leaves your premises, I promise! Some of the information is Base64-encoded; you can decode it using https://www.base64decode.org/.
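Pasting captured traffic into a third-party website sends that data outside your boundary, which is exactly what this setup tries to avoid. Python's standard base64 module can decode it locally instead. A minimal sketch; decode_b64 is a hypothetical helper, and it assumes the decoded content is UTF-8 text.

```python
import base64


def decode_b64(value):
    """Decode a Base64 string captured in Fiddler into UTF-8 text, locally."""
    # Restore '=' padding in case it was stripped from the captured value.
    padded = value + "=" * (-len(value) % 4)
    return base64.b64decode(padded).decode("utf-8")


if __name__ == "__main__":
    print(decode_b64("aGVsbG8gQXp1cmU="))
```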

What happens when internet connectivity is lost?

If an Azure cognitive services container loses its internet connection, it cannot report usage back to Azure. The service allows a grace period before it starts denying requests, so make sure the container has reliable internet connectivity. Microsoft wants to be paid on time :).

Conclusion:

In this tutorial, we took an in-depth look at the benefits, setup, configuration, and usage of Azure cognitive services containers. We also analyzed the underlying requests with Fiddler and verified that our confidential data does not leave the organization's boundary. So, those of you working in data-sensitive domains can enjoy them!