How the Azure Anomaly Detection API allows you to find weirdness inside a crowd - Part 2

Introduction

In the previous blog post, we introduced the newly launched anomaly detector service and discussed its use cases, strengths and weaknesses. Today, we will go deeper and inspect the API request/response model, the meaning of its parameters, and how we can gain some control over its results.

Using the API

Like other cognitive services, the anomaly detector exposes its functionality through a RESTful API. Since using RESTful APIs is straightforward for most developers and there are plenty of tutorials on the internet about them, I will keep the discussion here focused on the meaning of the request and response parameters.

Input Data

The API accepts a JSON request body that contains the following parameters:

- Granularity: The granularity of the data, such as Year, Month, Day, Hour or Minute (the sample below uses the value "daily"). The API uses it to verify that the input time series is valid.

- Time series: Pairs of values and UTC timestamps. A series must contain at least 12 and at most 8640 points. The points should be as evenly spaced as possible, that is, aggregated into a fixed time interval. Up to 10% missing points should not noticeably hurt detection; still, consider filling in missing data using statistical methods. The points must be sorted by timestamp in ascending order, with no duplicate timestamps.

- [Optional] Custom Interval: Used for non-standard intervals. For example, if the series has one point every 5 minutes, set the granularity to minutely and the custom interval to 5.

- [Optional] Period: The period of the time series. If it is not provided, the API tries to determine it automatically.

- [Optional] Maximum Anomaly Ratio: An advanced model parameter with a value between 0 and 0.5 (exclusive). It sets the maximum fraction of points that can be flagged as anomalies. You can think of it as a mechanism to keep only the top anomaly candidates.

- [Optional] Sensitivity: An advanced model parameter with a value between 0 and 99. The lower the value, the larger the margin, and therefore the fewer points are flagged as anomalies. You can think of it as a mechanism to control the "Upper Margin" and "Lower Margin", which are explained in the Output Data section.
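Before sending data, it can help to check these constraints on the client side. The Python sketch below is one way to do that. It assumes a request body shaped like the sample that follows, with the optional parameters under the assumed key names `sensitivity` and `maxAnomalyRatio` (only `granularity`, `series`, `timestamp` and `value` appear in this article's sample). `validate_request` and `daily_series` are hypothetical helpers, not part of any Azure SDK, and the sketch treats the 10% missing-data guideline as a hard limit, which is a simplification.

```python
from datetime import datetime, timedelta

MIN_POINTS = 12     # minimum series length stated in this article
MAX_POINTS = 8640   # maximum series length stated in this article
TS_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # UTC timestamps such as "2018-03-01T00:00:00Z"


def validate_request(body: dict) -> list:
    """Return a list of problems in a request body (empty list means none found).

    Assumption: optional parameters use the keys "sensitivity" and "maxAnomalyRatio".
    """
    problems = []
    series = body.get("series", [])
    if not MIN_POINTS <= len(series) <= MAX_POINTS:
        problems.append(f"series has {len(series)} points, expected {MIN_POINTS} to {MAX_POINTS}")
    stamps = [datetime.strptime(p["timestamp"], TS_FORMAT) for p in series]
    # Ascending with no duplicates: each timestamp is strictly later than the previous one.
    if any(later <= earlier for earlier, later in zip(stamps, stamps[1:])):
        problems.append("timestamps must be in ascending order without duplicates")
    if not problems:
        # Estimate missing points against a fixed grid, using the smallest gap as the interval.
        step = min(later - earlier for earlier, later in zip(stamps, stamps[1:]))
        expected = int((stamps[-1] - stamps[0]) / step) + 1
        missing = expected - len(stamps)
        if missing / expected > 0.10:
            problems.append(f"{missing} of {expected} points missing (more than 10%)")
    sensitivity = body.get("sensitivity")
    if sensitivity is not None and not 0 <= sensitivity <= 99:
        problems.append("sensitivity must be between 0 and 99")
    ratio = body.get("maxAnomalyRatio")
    if ratio is not None and not 0 < ratio < 0.5:
        problems.append("maxAnomalyRatio must be greater than 0 and less than 0.5")
    return problems


def daily_series(start: datetime, n: int, skip=()) -> list:
    """Build n daily points from `start`, leaving out the indices in `skip` (for trying things out)."""
    return [
        {"timestamp": (start + timedelta(days=i)).strftime(TS_FORMAT), "value": float(i)}
        for i in range(n)
        if i not in skip
    ]
```

For example, a 14-day grid with two days removed passes the length check but is reported as having 2 of 14 points missing, which is above the 10% guideline.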

Here is an example that uses only the mandatory parameters. The series is shortened to three points for readability; a real request needs at least 12 points.

```json
{
  "granularity": "daily",
  "series": [
    {
      "timestamp": "2018-03-01T00:00:00Z",
      "value": 32858923
    },
    {
      "timestamp": "2018-03-02T00:00:00Z",
      "value": 29615278
    },
    {
      "timestamp": "2018-03-03T00:00:00Z",
      "value": 22839355
    }
  ]
}
```

Table 1 API input data sample with mandatory parameters (no optional parameter is used)
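To send a request that also uses the optional parameters, the body can be assembled and posted with Python's standard library. This is a minimal sketch: the endpoint and key are placeholders, and the paths `/anomalydetector/v1.0/timeseries/entire/detect` (batch) and `/anomalydetector/v1.0/timeseries/last/detect` (latest point), the `Ocp-Apim-Subscription-Key` header and the optional key names are assumptions to verify against the current REST reference. `build_body` and `prepare_request` are hypothetical helpers, and the request is only constructed here, not sent.

```python
import json
import urllib.request

# Placeholders: replace with your own resource endpoint and key.
ENDPOINT = "https://YOUR-RESOURCE.cognitiveservices.azure.com"
API_KEY = "YOUR-KEY"

# Assumed v1.0 paths for batch (entire series) and latest-point detection.
BATCH_PATH = "/anomalydetector/v1.0/timeseries/entire/detect"
LAST_PATH = "/anomalydetector/v1.0/timeseries/last/detect"


def build_body(series, granularity="daily", custom_interval=None, period=None,
               max_anomaly_ratio=None, sensitivity=None):
    """Build a request body, adding each optional parameter only when it is set.

    Assumption: the optional keys are customInterval, period, maxAnomalyRatio, sensitivity.
    """
    body = {"granularity": granularity, "series": series}
    optional = {
        "customInterval": custom_interval,
        "period": period,
        "maxAnomalyRatio": max_anomaly_ratio,
        "sensitivity": sensitivity,
    }
    body.update({key: value for key, value in optional.items() if value is not None})
    return body


def prepare_request(body, path=BATCH_PATH):
    """Create (but do not send) a JSON POST request for the given detection path."""
    return urllib.request.Request(
        ENDPOINT + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Ocp-Apim-Subscription-Key": API_KEY},
        method="POST",
    )
```

Calling `urllib.request.urlopen(prepare_request(body))` would send the request and return the JSON response. For a series with one point every 5 minutes, the body might be built with `build_body(series, granularity="minutely", custom_interval=5)`.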

Output Data

The API response contains the following fields:

- Is Anomaly: Indicates whether the point is an anomaly in either the positive (spike) or negative (dip) direction.

- Is Positive Anomaly: Indicates that the point is an anomaly in the positive direction, meaning that the observed value is higher than the expected value.

- Is Negative Anomaly: Indicates that the point is an anomaly in the negative direction, meaning that the observed value is lower than the expected value.

- Period: The period of the series' recurring pattern; a value of zero means that no recurring pattern was found.

- Expected Value: The expected value of the point.

- Upper Margin: The upper margin of the point. It is used to calculate the Upper Boundary, which equals Expected Value + (100 - Margin Scale) × Upper Margin, where Margin Scale is the sensitivity parameter.

- Lower Margin: The lower margin of the point. It is used to calculate the Lower Boundary, which equals Expected Value - (100 - Margin Scale) × Lower Margin.

- [Only in last point detection] Suggested Window: The suggested number of input points needed to detect the latest point.

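Note: The batch detection API returns the per-point fields as lists with one entry per input point (with plural names such as expectedValues and upperMargins), and its response does not include the Suggested Window property (see Table 2 and Table 3).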
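The two boundary formulas above translate directly into code. This is a minimal sketch, assuming that Margin Scale is the sensitivity value (0 to 99) sent with the request; `boundaries` is a hypothetical helper name.

```python
def boundaries(expected_value, upper_margin, lower_margin, margin_scale):
    """Return (lower_boundary, upper_boundary) using the formulas above.

    Assumption: margin_scale is the sensitivity value (0-99) from the request.
    """
    if not 0 <= margin_scale <= 99:
        raise ValueError("margin_scale must be between 0 and 99")
    factor = 100 - margin_scale
    lower_boundary = expected_value - factor * lower_margin
    upper_boundary = expected_value + factor * upper_margin
    return lower_boundary, upper_boundary


# Illustrative numbers: expected value 100, upper margin 5, lower margin 4.
print(boundaries(100.0, 5.0, 4.0, 99))  # (96.0, 105.0)
print(boundaries(100.0, 5.0, 4.0, 90))  # (60.0, 150.0)
```

With a margin scale of 99 the factor is 1, so each boundary sits exactly one margin away from the expected value.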

```json
{
  "isAnomaly": false,
  "isPositiveAnomaly": false,
  "isNegativeAnomaly": false,
  "period": 12,
  "expectedValue": 809.2328084659704,
  "upperMargin": 40.46164042329852,
  "lowerMargin": 40.46164042329852,
  "suggestedWindow": 49
}
```

Table 2 Last point detection API response sample
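One possible use of Suggested Window, assumed here rather than stated in this article, is to send only the most recent points in later last-point requests. The sketch below parses the Table 2 response, describes the latest point, and trims a history list to the suggested window; `summarize` and `trim_to_window` are hypothetical helpers.

```python
import json

# Response body copied from Table 2.
LAST_POINT_RESPONSE = json.loads("""{
  "isAnomaly": false, "isPositiveAnomaly": false, "isNegativeAnomaly": false,
  "period": 12, "expectedValue": 809.2328084659704,
  "upperMargin": 40.46164042329852, "lowerMargin": 40.46164042329852,
  "suggestedWindow": 49
}""")


def summarize(response: dict) -> str:
    """Describe the latest point using the anomaly flags of a last-point response."""
    if response["isPositiveAnomaly"]:
        return "spike"
    if response["isNegativeAnomaly"]:
        return "dip"
    return "normal"


def trim_to_window(history: list, response: dict) -> list:
    """Keep only the most recent `suggestedWindow` points of the history.

    Assumption: this is one possible way to use the field, not a documented requirement.
    """
    window = response["suggestedWindow"]
    return history[-window:] if window > 0 else history
```

With the Table 2 response, a 100-point history would be trimmed to its last 49 points, while a shorter history is returned unchanged.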

```json
{
  "expectedValues": [827.7940908243968, 798.9133774671927, 888.6058431807189],
  "upperMargins": [41.389704541219835, 39.94566887335964, 44.43029215903594],
  "lowerMargins": [41.389704541219835, 39.94566887335964, 44.43029215903594],
  "isAnomaly": [false, false, false],
  "isPositiveAnomaly": [false, false, false],
  "isNegativeAnomaly": [false, false, false],
  "period": 12
}
```

Table 3 Batch point detection API response sample - note that the suggested window field is absent
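Because the batch response stores each field as a parallel list, it is often convenient to regroup it into one record per input point. A minimal sketch using the Table 3 response; the timestamps are illustrative, since the article does not show the request that produced this response, and `to_records` is a hypothetical helper.

```python
import json

# Response body copied from Table 3.
BATCH_RESPONSE = json.loads("""{
  "expectedValues": [827.7940908243968, 798.9133774671927, 888.6058431807189],
  "upperMargins": [41.389704541219835, 39.94566887335964, 44.43029215903594],
  "lowerMargins": [41.389704541219835, 39.94566887335964, 44.43029215903594],
  "isAnomaly": [false, false, false],
  "isPositiveAnomaly": [false, false, false],
  "isNegativeAnomaly": [false, false, false],
  "period": 12
}""")

# Maps each per-point list in the response to a singular field name in the records.
PER_POINT_FIELDS = {
    "expectedValues": "expectedValue",
    "upperMargins": "upperMargin",
    "lowerMargins": "lowerMargin",
    "isAnomaly": "isAnomaly",
    "isPositiveAnomaly": "isPositiveAnomaly",
    "isNegativeAnomaly": "isNegativeAnomaly",
}


def to_records(response: dict, timestamps: list) -> list:
    """Regroup the parallel lists of a batch response into one dict per input point."""
    for list_name in PER_POINT_FIELDS:
        if len(response[list_name]) != len(timestamps):
            raise ValueError(f"{list_name} does not have one entry per timestamp")
    records = []
    for i, ts in enumerate(timestamps):
        record = {"timestamp": ts}
        for list_name, field in PER_POINT_FIELDS.items():
            record[field] = response[list_name][i]
        records.append(record)
    return records


# Hypothetical timestamps, one per input point, in the same order as the request.
TIMESTAMPS = ["2018-03-01T00:00:00Z", "2018-03-02T00:00:00Z", "2018-03-03T00:00:00Z"]
records = to_records(BATCH_RESPONSE, TIMESTAMPS)
```

The design relies on the response lists being in the same order as the input series, which is what "one entry per input point" implies.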

Explanation through Visualization

Since a picture is worth a thousand words, an image that shows how anomaly detection works should give us a deeper understanding of what is actually happening.

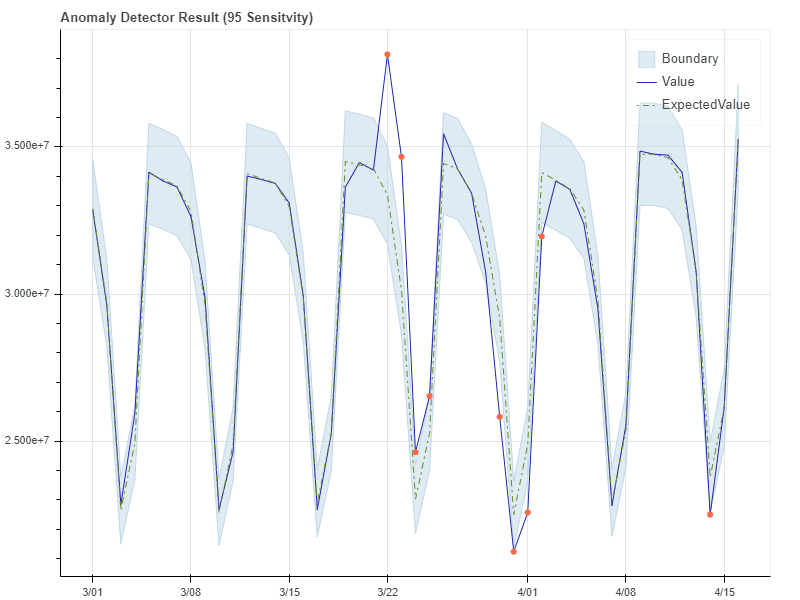

Figure 2 Anomaly detection result visualized, Source

The graph plots the data values (y-axis) over time (x-axis). The blue line represents the actual data points supplied, while the green dotted line represents the expected values generated by the anomaly detection model. The anomaly detector then generates a boundary (light blue) around the expected values: the distance between the expected value and the upper boundary is the upper margin, while the distance between the expected value and the lower boundary is the lower margin. This boundary represents the acceptable range for the data according to the generated AI model. Finally, every actual data point is compared against the boundary as follows:

- If the actual value falls within the boundary, it will not be categorized as an anomaly.

- If the actual value is outside the boundary on the upper side, it will be categorized as a positive anomaly (red dot).

- If the actual value is outside the boundary on the lower side, it will be categorized as a negative anomaly (red dot).
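The three rules above fit in a small function. This sketch assumes, as the figure description states, that each boundary sits one margin away from the expected value (under the Output Data formula, that corresponds to a margin scale of 99); `classify` is a hypothetical helper.

```python
def classify(actual, expected, upper_margin, lower_margin):
    """Label one point using the boundary rules above.

    Assumption: boundary = expected value +/- margin, as in the figure description.
    """
    upper_boundary = expected + upper_margin
    lower_boundary = expected - lower_margin
    if actual > upper_boundary:
        return "positive anomaly"  # above the band: a spike
    if actual < lower_boundary:
        return "negative anomaly"  # below the band: a dip
    return "normal"  # inside the band, boundaries included
```

For example, with the Table 2 values (expected value about 809.23, margins about 40.46), a reading of 900 lies above the upper boundary of about 849.69 and is classified as a positive anomaly.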

Therefore, we can perform the following actions using the anomaly detector API:

- Predict the expected values based on the historical data in the time series

- Tell whether a data point deviates from the historical pattern, that is, whether it is an anomaly

- Generate a band that visualizes the range of "normal" values

Factors that affect the accuracy

It is worth mentioning that several factors affect the results, such as:

- How the time series is prepared

- Anomaly detector parameters used

- The number of data points

In the next section, we will see how we can control the results of the anomaly detector by adjusting two parameters: the sensitivity and the maximum anomaly ratio.

Taking control

There are two main ways to manipulate the results of the anomaly detection:

Applying the sensitivity (margin scale) parameter

The sensitivity parameter is an advanced model parameter that adjusts the "thickness" of the margin boundary. It is inversely related to the margin: the higher the sensitivity, the narrower the margin, and hence the more points are flagged as anomalies, and vice versa.
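To see how sensitivity changes the band, the sketch below applies the boundary formula from the Output Data section to a fixed margin of 2.0 (an arbitrary illustrative value) for several sensitivities. Under that formula, the distance from the expected value to each boundary shrinks linearly as the sensitivity increases; `band_half_width` is a hypothetical helper.

```python
def band_half_width(margin, sensitivity):
    """Distance from the expected value to a boundary: (100 - sensitivity) * margin.

    Assumption: follows the Upper/Lower Boundary formula given in the Output Data section.
    """
    if not 0 <= sensitivity <= 99:
        raise ValueError("sensitivity must be between 0 and 99")
    return (100 - sensitivity) * margin


# Illustrative margin of 2.0; a higher sensitivity gives a narrower band.
widths = {s: band_half_width(2.0, s) for s in (0, 50, 90, 99)}
print(widths)  # {0: 200.0, 50: 100.0, 90: 20.0, 99: 2.0}
```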

This parameter hides many details of the underlying anomaly detection algorithms, which makes it easy to use for software developers with no machine learning background. Microsoft follows a "single parameter strategy" for its AI services so that developers can adjust the outcome of a specific machine learning operation without machine learning knowledge.

Applying the maximum anomaly ratio

This parameter caps the number of anomalies based on a ratio. For example, if a series has 100 points and the ratio is 0.1, at most 10 anomalies will be returned.
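The cap itself is simple arithmetic. The sketch below assumes the limit is rounded down when the product is not a whole number, which the article does not specify. It also adds a hypothetical `keep_top_candidates` function that illustrates the "top anomaly candidates" idea by keeping the candidates with the largest deviations; it is an illustration only, not the service's internal algorithm.

```python
import math


def max_anomalies(num_points, ratio):
    """Upper bound on the number of flagged points (assumption: rounded down)."""
    if not 0 < ratio < 0.5:
        raise ValueError("ratio must be greater than 0 and less than 0.5")
    return math.floor(num_points * ratio)


def keep_top_candidates(candidates, num_points, ratio):
    """Hypothetical illustration: keep at most max_anomalies(...) candidates.

    `candidates` maps a point index to how far that point strays outside its boundary;
    the strongest candidates are kept and returned as sorted indices.
    """
    limit = max_anomalies(num_points, ratio)
    ranked = sorted(candidates, key=candidates.get, reverse=True)
    return sorted(ranked[:limit])


print(max_anomalies(100, 0.1))  # 10
```

In this illustration, if 15 points of a 100-point series stray outside the band and the ratio is 0.1, only the 10 with the largest deviations would be kept.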

Conclusion and Personal Reflections

Finally, we have reached the end of this two-article series! I believe the anomaly detection API is a nice breakthrough from Microsoft. However, I am still hesitant about its performance under different time series workloads (e.g., exponentially growing time series). On the plus side, the anomaly detector service is also available as a Docker container; I have previously discussed the benefits of using Docker containers to host cognitive services. Please let me know what you think in the comments section. Also, do not forget to follow me on Twitter and subscribe to the mailing list to get the latest and greatest on making your code smart and your career smarter!